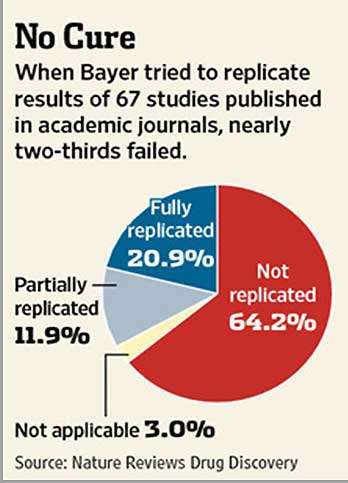

An alarming phenomenon has come to our attention in science: many published results fail to reproduce. A 2011 paper in Nature Reviews Drug Discovery by researchers from Bayer reported that nearly two-thirds of their company's in-house attempts to replicate potential drug targets from the published literature failed. A 2012 Nature article reported that researchers at the biotech firm Amgen were able to reproduce only 6 of 53 (about 11 percent) "landmark" papers in hematology and oncology. And this past May, researchers at the University of Texas MD Anderson Cancer Center reported in PLOS ONE that roughly half of the researchers at their center had been unable to reproduce published data on at least one occasion.

These are just a few examples of a problem that is appearing across many scientific fields. Its causes include the use of inappropriate statistical and analytical methods, biases and a lack of independence between academic groups, and an academic and publishing environment that rewards publishing only positive results (and publishing them rapidly).

Reproducibility is the cornerstone of the scientific method, and achieving it requires methodological detail and transparency. The high rate of irreproducibility we are currently seeing in the biomedical sciences is a problem on many levels. It erodes public trust in science, it wastes limited research dollars, and it discourages industry investment. It is especially problematic for translational research. Pharmaceutical companies must go to great expense to replicate published findings themselves to avoid pursuing lead compounds of uncertain promise, and these concerns apply even to findings published in the most prestigious (and highest-impact) scientific journals.

Last year, the National Institute of Neurological Disorders and Stroke held a workshop of researchers, journal editors, industry representatives, and others to discuss what can be done about this issue. The consensus was that experimental design is widely underreported in articles and grant applications, that animal research should follow a core set of research parameters, and that a concerted effort by all stakeholders is needed to disseminate and adopt best reporting practices. Subsequently, an ad hoc NIH group met to discuss how NIH institutes and centers can address the problem and arrived at guiding principles that include improving researcher education and grant evaluation and finding ways to correct the perverse reward incentives that currently prevail for investigators.

Among the measures NIDA is taking, we are issuing a notice to investigators on improving the reporting of research methods in translational animal research. Transparent reporting of animal selection, sample size estimation, statistical methods, blinding, and other parameters should improve the quality of research, help other laboratories reproduce findings, and make it easier to move those findings into the clinic. NIDA will also convene workshops at upcoming scientific conferences to inform the addiction research community about these issues, and we are piloting a checklist to improve the review of translational research grant applications.

The scientific community and I personally take this issue very seriously. We must improve reproducibility and transparency to accelerate the translation of our science into real-world treatments for addiction.