I have written before about the reproducibility crisis in the health sciences. A high percentage of published findings cannot be reproduced, and this has prompted NIH Institutes and other funding agencies, as well as scientific journals and universities, to take measures to improve how science is taught, funded, conducted, and published. Among other things, it is crucial to ensure that the less exciting work of replication (and the publishing of negative results) is properly incentivized and that researchers are completely clear and transparent when describing their methods.

Like our colleagues in other Institutes, we at NIDA are committed to addressing the problem; however, I have also come to think we should not let ourselves become too discouraged by it. The reproducibility crisis does not merely reflect a failure of scientists or scientific organizations; it is also an inevitable effect of the rapidly increasing power and complexity of the science we are doing. The biological systems we study are unimaginably complex and dynamically changing, and they have many, many forms of variance that only become apparent when subjected to increasingly sophisticated tools and methods of analysis. The complexity of the analyses we now perform, and the huge data sets being generated, are unprecedented, so we should not be surprised that we are encountering much more fine-grained variability than we ever expected or were prepared for.

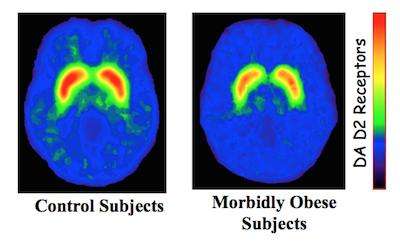

This was driven home to me recently in the context of my research on dopamine signaling in obesity. I have consistently found a significant reduction in dopamine D2 receptors in the striatum of obese individuals. However, some of my collaborators at Vanderbilt found an increase in these same receptors. When I visited the lab and met with my colleagues there, a likely reason for the discrepancy soon became apparent: Whereas I had scanned my subjects during the day, they had been scanning subjects at night. The time of day was a detail neither of us thought to consider or mention when publishing our methods, yet it turned out to make a big difference in dopamine receptor expression.

As our ability to analyze biological systems increases in resolution, so too does the number of variables that can and need to be taken into account. Even when they do everything right and act in good faith, different laboratories may be unable to replicate findings because of nuances that might not have mattered as much in an earlier era, when research focused on coarser-grained phenomena.

If anything, the reproducibility crisis gives us a humbling reminder that science is still in its adolescence; failures of replication are growing pains, and they may not end anytime soon. As science keeps advancing at an accelerating rate, and as we bring greater resolution to bear on our objects of study, we are likely to see more and more variability in findings. In light of this, we can maximize the quality of our data by ensuring that experiments follow proper methodology, including in data analysis, and by enhancing the transparency of our published findings.